How To A/B Test Your Affiliate Links and Increase Commissions


Jesse is a Native Montanan and the co-founder and CEO of Geniuslink - or, as he likes to say, head cheerleader. Before Jesse co-founded Geniuslink, he was a whitewater rafting guide, worked at a sushi restaurant, a skate/snowboard shop, was a professional student, and then became the first Global Manager at Apple for the iTunes Affiliate Program.

- March 20, 2024

As you start hitting your stride with affiliate marketing and leveling up your skills, there is an important discipline to explore: experimentation. Your affiliate links could be earning you higher commissions if you can figure out what to tweak.

Trusting your gut is essential in the early days of your adventures in affiliate marketing, when “anything is better than nothing” and “perfect shouldn’t get in the way of done” ring true. Eventually, you may find that your head and your gut disagree on things. When this happens, it’s a good time to start running some experiments with your affiliate links to discover what truly performs best.

In today’s rapidly evolving world of affiliate marketing, there are dozens of things to test that can make a real difference to your bottom line. For example:

After Amazon’s commission rate changes, is Walmart a higher-yielding affiliate program?

Will using a Choice Page with multiple retailers convert better than using a direct link to Amazon?

Nike is a global brand and has regional storefronts, therefore will a geo-targeted link perform better than the affiliate links provided directly from CJ?

Experiments can take many forms, and one we often hear about is running A/B (or multivariate) tests on your website to see what converts best. If you have a website, I wholeheartedly agree. It’s even something we’ve done on the Geniuslink website in the past.

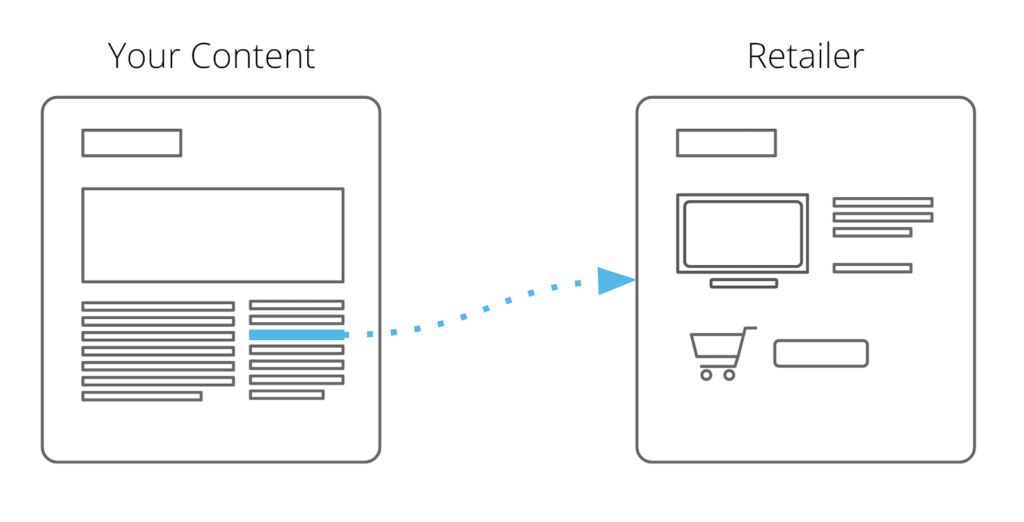

Traditionally, running experiments (via one of the various javascript-based testing tools) primarily focuses on making tweaks to your website. Today we are focusing instead on testing the destination of your (affiliate) links, which is managed via a link management tool (Geniuslink) and can be explored in parallel with your website optimization tests.

Also, quite simply, a javascript-based testing tool doesn’t work for those who share links from their YouTube channel, Twitch stream, Pinterest, Facebook, or Twitter. If you are looking to run experiments from your social channels, you’ll need to use a link management tool.

We are going to go step by step on setting up and running two experiments using the Geniuslink link management service — comparing how a Choice Page performs compared to a direct Amazon link and how a direct Nike link compares to a geo-targeted link.

The A/B Split Links

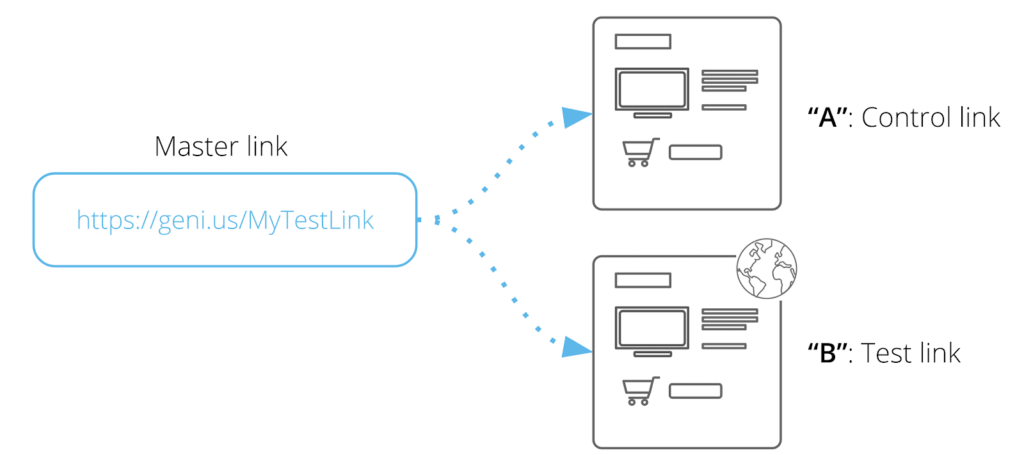

It was over four years ago when we built out the functionality for an affiliate link to randomly redirect a click to a specified destination a predefined percentage of the time.

This functionality sets you up to be able to send a portion of your traffic to your “control” destination (the “baseline” you are measuring against) and the remainder of your clicks to your “test” destination (the new “improvement” you want to compare against the baseline).
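To make the idea concrete, here is a minimal sketch of a percentage-based split. It is not Geniuslink's implementation; the function name `pick_destination` and the `example.com` URLs are hypothetical, and the only assumption is that each click is assigned independently according to the configured weights.

```python
import random
from collections import Counter


def pick_destination(split, rng=random):
    """Pick one destination for a click.

    `split` maps each destination URL to its share of traffic (a weight,
    e.g. a percentage). This is an illustrative sketch only.
    """
    urls = list(split)
    weights = [split[url] for url in urls]
    # random.choices draws one item with probability proportional to its weight
    return rng.choices(urls, weights=weights, k=1)[0]


# Hypothetical 50/50 split between a control and a test destination
split = {
    "https://example.com/control": 50,
    "https://example.com/test": 50,
}

rng = random.Random(42)  # seeded only so the simulation is repeatable
counts = Counter(pick_destination(split, rng) for _ in range(10_000))
print(counts)
```

Over a large number of clicks, each destination receives roughly its configured share, even though any single click is random.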

Using a single link that splits the traffic is advantageous in testing for two reasons. First, it ensures that the external forces that might impact the test are “normalized” (kept the same) so that the experiment doesn’t favor one outcome over the other.

This is convenient because you can run your test within one link, or set of links, instead of having to create multiple sets of links and try to ensure they are posted in a similar way, etc.

Second, by dividing your traffic, via a single link, you can run your experiment with both the “test” and the “control” at the same time.

As many affiliate marketers know, there can be significant seasonal changes in clicks, conversions, and commissions in a matter of months (for example, November and December are often the peaks, while February and March are typically the valleys for affiliate-related KPIs). A/B test links help keep these variables normalized.

While you can build a stand-alone A/B Split link with various definitions, you can also use them inside of an Advanced link. This is super helpful for when you want to narrow in your test to a certain subset of your traffic.

For example, if you wanted to compare how a change in your link destinations would impact only US and Canada traffic then you can do that.

Simply set up a rule in your Advanced link that applies only to US and Canada traffic and use the A/B test link functionality inside of that rule. The rest of your traffic could be sent elsewhere to ensure it didn’t muck with the experiment results.

Overview of an Experiment

In our experiments, we have seven distinct steps that we follow. We’ll be walking through each one individually, but I want to quickly introduce you to them (and you can use this to jump around as needed).

1/ Define your question – The best place to start is the beginning. This is where you spend a few minutes analyzing the situation and opportunities. It’s also where you define your hypothesis that you want to test.

2/ Plan your approach – In this step, you dig into your hypothesis and define your “control” and “test” variables then sketch out how you will run your test.

3/ Set up your links – This is where things start to get a bit technical and where having a good plan pays off in spades. It’s also where your Geniuslink dashboard gets put into action.

4/ Publish (and test!) – After you have your affiliate links set up it’s time to get some traffic running through your links. However, it’s always important to test your links early on to ensure everything is set up correctly and the experiment is running as it should.

5/ Wait – This part is easy. You need enough clicks (and sales) running through your test and control links that there is enough data to crunch. It’s only a matter of time.

6/ Analyze – Once you think the links have seen enough clicks it’s time to grab the relevant data and start comparing numbers.

7/ Review Results – After you’ve got all of your numbers in one place it’s time to make a decision. Did the data support your thesis or not? Are you confident in your results or do you need more data or time?

Ready to dig in? Let’s do it!

1/ Define your question

As we mentioned early on, today’s world of affiliate marketing is rapidly evolving and there are countless variables to test that can make a real difference in your revenue. We had three questions that represent numerous inquiries we get from our clients. These included:

After Amazon’s commission rate changes, is Walmart a higher-yielding affiliate program?

Will using a Choice Page with multiple retailers convert better than using a direct link to Amazon?

Nike is a global brand and has regional storefronts, therefore will a geo-targeted link perform better than the affiliate links provided directly from CJ?

Turns out we’ve actually been testing the last two and will share our notes, data, and results through the rest of this blog! (With regard to the first question, we are firm believers that you shouldn’t be making “either/or” decisions about using Amazon’s affiliate program but should use it in combination with other programs, and this is something we can help you set up to test for yourself!)

Take Notes!

The first thing you want to do once you’ve identified that you want to run an experiment is to start taking notes and saving them in an easily accessible place. Just as a scientist will always have a journal by their side, your notes are an important part of your exploration.

Research

Before you get started on your experiment you need to become as knowledgeable as possible about your content area. Spend a little time digging in and learning what you can.

You may find that someone has already done a similar experiment that you can recreate or take inspiration from, or you may find that their results just don’t add up. The gist is the more you know as you build your experiment the more insightful your learnings will be.

State a Thesis

Once you know what you want to test and have developed a plan for how you’re going to record the journey it’s time to declare what you think will happen. This is your thesis and an important part of beginning any experiment.

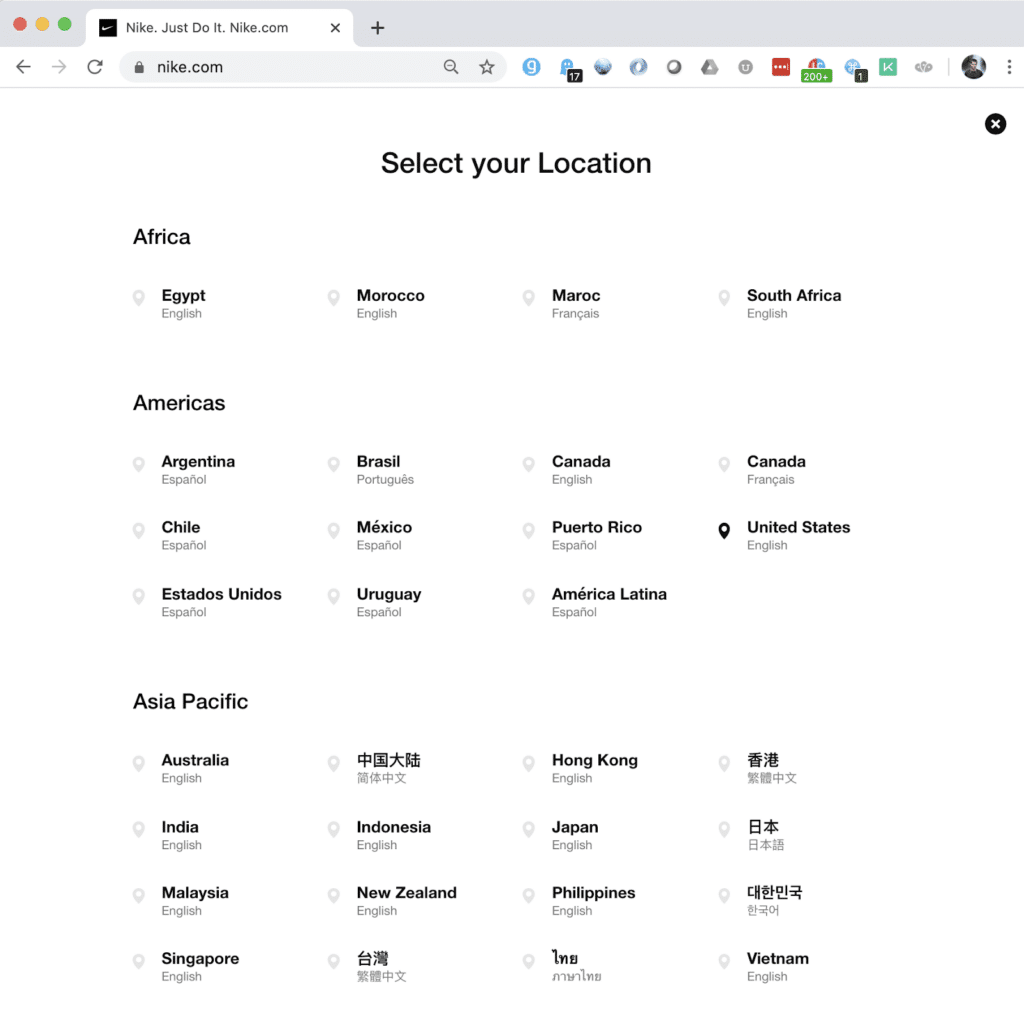

Experiment 1- Should you be localizing links from an affiliate program (like Nike)?

Many global brands support their international shoppers by creating regional and country-specific storefronts that are optimized for each audience. On top of that, they often create affiliate programs that are specific to each storefront, not the brand as a whole.

This is exactly how Amazon is set up now and how iTunes and Microsoft were set up back when we first started supporting them. We’ve proven time and time again that a geo-targeted solution for these three retailers leads to significantly higher conversions and commissions. Can the same be true for other major brands that have affiliate programs?

We were asked that exact question by our friends over at AWIN, specifically about one of the most well-known brands in the world — Nike.

Nike supports nearly 80 different storefronts around the world and, unlike Amazon, they have some basic geo-targeting built into their stores. For some products and countries, Nike can redirect a shopper that clicked an affiliate link for the wrong regional storefront to the correct product in their local storefront.

Nike also has separate affiliate programs supporting the majority of their regional storefronts, however, there is some fragmentation. For the US Nike store, the affiliate program is run through the CJ affiliate network. However, the rest of the Nike stores have affiliate programs managed by AWIN. This means that a purchase from the UK Nike store won’t earn a commission if the click originated through a CJ link. Conversely, a US Nike store conversion won’t yield a return if the shopper started with an AWIN link.

Unfortunately, most affiliate publishers using Nike’s affiliate program only sign up for one program and only build affiliate links for one specific storefront. This leads to the question, will a geo-targeted link (that sends non-US traffic to AWIN) perform better than the affiliate links provided directly from CJ?

Since we had seen great success with adding geotargeting into the affiliate links of global brands with fragmented affiliate programs, our thesis became: “Localizing a Nike affiliate link will lead to more commissions and a higher conversion rate.”

(A huge thanks to WearTesters for allowing us to share the data from our Nike link experiment!)

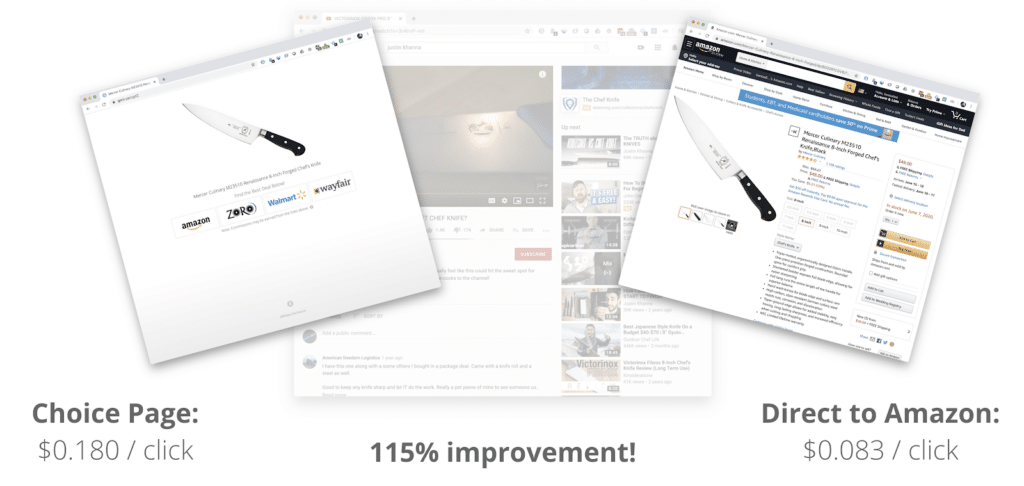

Experiment 2 – Are Choice Pages better than direct Amazon links?

There has been a lot of angst in regards to Amazon’s affiliate program lately, especially after their significant cuts in commission rates for a third of the program.

This has pushed affiliates to consider ways to not only boost conversions for their Amazon affiliate links but also start to diversify their affiliate portfolio by utilizing additional programs.

We at Geniuslink have been working on a solution to this exact problem for some time.

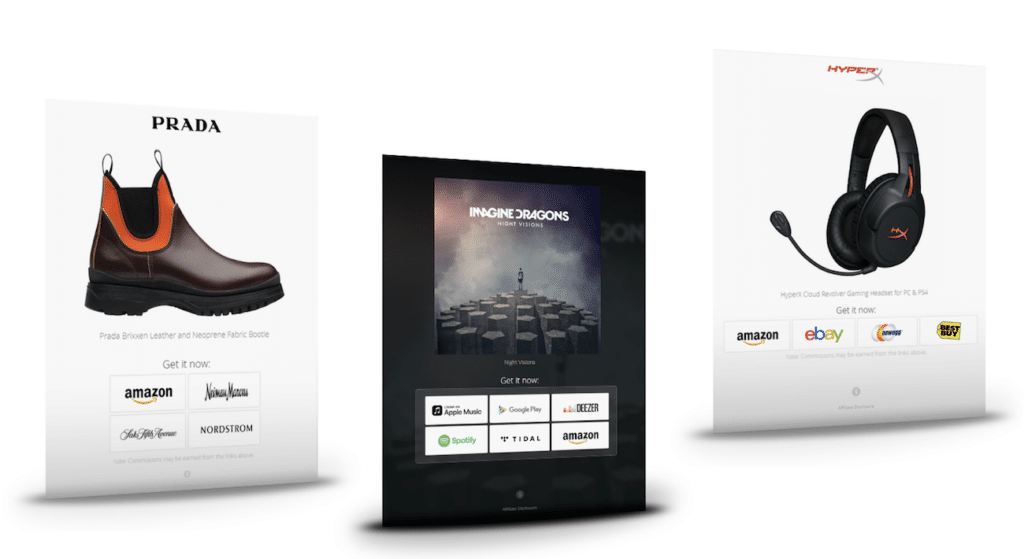

We recently pushed a major update to our Choice Pages to simplify the process of building a multi-retailer, mobile-optimized landing page to promote a specific product.

While the music industry, and now the book industry, tend to be fully reliant on this style of landing page in their marketing, the question is whether this approach makes sense for affiliate marketing focused on physical products.

We have lots more functionality planned out for this feature, but we were curious to see the results from Choice Pages in their current state. Does the extra click, and additional buying options, help or hurt conversions and affiliate revenue?

While we are biased and believe the extra options will help, we wanted to be as impartial as humanly possible and run some experiments on the success of Choice Pages, in their current state, compared to a direct Amazon link.

Justin Khanna, a professional chef and YouTuber, was eager to join us for this test and graciously provided access to his links.

With this in mind, our thesis for this test is: “A Choice Page, optimized for the US, will perform better than an Amazon.com link.”

2/ Define how you are going to test

Once we have written down a few notes on what we want to test and have stated our thesis, it’s time to dive into actually building out the framework for the test. Again, these steps should all be recorded alongside your initial notes.

Control vs. Test

The first step in building out our experiment is defining what’s getting tested. This should be done as scientifically as possible to ensure external factors don’t influence your results. When you perform your experiment you want to filter out everything but the specific variables you are testing for. It’s also important that you have two (or more) things to compare against. Finally, one of those things should be your “status quo” and should accurately reflect how things would be continued normally, so that you can accurately gauge how much better (or worse) your second option is.

The “Control” in your experiment is this status quo, the model of the existing behavior, and what you will measure the “Test” results against.

The “Test” in the experiment is the new thing you think has potential and you want to measure.

Taking our two examples from above, let’s break down what the test and control might be.

Thesis: Localizing a Nike affiliate link will lead to more commissions and a higher conversion rate.

In this case, the control would be the standard direct Nike affiliate link provided by CJ, while the test would be a localized Nike link.

Thesis: A Choice Page, optimized for a specific region, will perform better than a direct Amazon link.

For this test, a direct Amazon link, for a specific country’s traffic, would be the control, while the test would be a Choice Page, again for the same specific country’s traffic.

Testing for Specifics

To make sure your test is only measuring what you want, you may need to filter out the noise. For example, in both of these experiments we want to isolate the audience we are testing for.

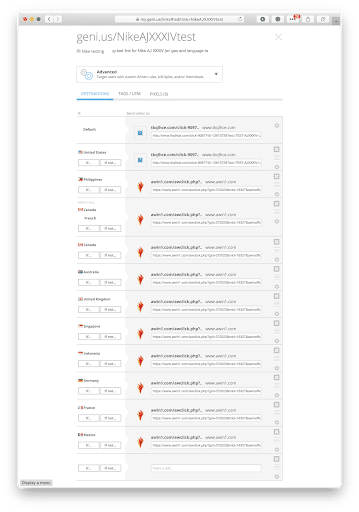

With Nike, we aren’t going to build a link with the correct product in every one of Nike’s regional storefronts (~ 80!). Instead, we’ll identify the top ten countries that cover the bulk of the traffic and build our test around localizing affiliate links for those countries. This will save significant time in setting up the test and the results should be in line with what we’d expect from doing all of the storefronts.

For Choice Pages, we want to test for US-specific traffic. As a result, we’d only direct clicks coming from the US to our A/B split that we’d be using for the test.

When we are testing the performance of the destinations of our affiliate links via the A/B testing link, we can actually nest this inside of an Advanced link to filter out the clicks that we don’t want for the test. We cover this in more detail in the next section.

Define your Metric

At this early stage, it’s also important to know how you will measure your test results. What is the KPI you will use to compare your test results versus those coming from your control?

For affiliate performance, I’m a huge fan of Earnings Per Click (EPC). EPC normalizes the results for your clicks and pulls in the most important metric, the total commissions.

Normalizing for clicks is really important! Even with a closely controlled A/B test, with a 50/50 split, you may find that your test or control gets slightly more clicks than the other. Further, comparing EPC also allows you the luxury of continuing the test at a later time with traffic skewed to send more clicks to the destination that is performing better. This grants you the financial upside during the test while maintaining the ability to learn, or at least verify your learnings, from an experiment.

Your total commissions are what this is all about in the end so it’s important to factor those in beyond just EPC.
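As a quick illustration of how EPC normalizes for uneven click counts, here is a small sketch. The click and commission figures are made up for the example and are not results from either experiment; the `ArmResult` class is a hypothetical helper.

```python
from dataclasses import dataclass


@dataclass
class ArmResult:
    """Totals for one side (arm) of the experiment."""
    name: str
    clicks: int
    commissions: float  # total commissions, all in the same currency

    @property
    def epc(self) -> float:
        # Earnings Per Click = total commissions / total clicks
        return self.commissions / self.clicks if self.clicks else 0.0


# Hypothetical numbers: the two arms rarely get exactly the same click count
control = ArmResult("control", clicks=1040, commissions=52.00)
test = ArmResult("test", clicks=980, commissions=58.80)

# Relative change in EPC of the test versus the control
lift = (test.epc - control.epc) / control.epc

for arm in (control, test):
    print(f"{arm.name}: EPC = {arm.epc:.4f}, total = {arm.commissions:.2f}")
print(f"EPC lift of test over control: {lift:.1%}")
```

Because EPC divides by clicks, the comparison stays fair even if the split is uneven; the total commissions are printed alongside so they are not lost from view.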

Test size

Another important decision to be made in the early stages of setting up your experiment is around how large you plan to make this experiment. Are you planning on only running a test on a link or two, or lots of links, or maybe a bunch of links grouped together, or across a whole channel, digital property, or site?

Besides thinking about the breadth of the experiment you also have to consider the time you have to set up and run the experiment.

To help answer the size question you need to think a moment about some of these other questions:

How granular do you want your experiment and results to be? The more granular your experiment set up the more insights you can pull out, however, it’s more time-intensive to set up testing on an individual link level compared to testing links as a group.

How much time do you have to set up and run the test? If you have limited time to set up the experiment then testing a group of affiliate links together may make the most sense. If time isn’t a constraint then building a large number of individual links would be ideal.

Further, how eager are you to get results? If you want results sooner, then you might be better off testing a larger number of affiliate links as a group so that the total traffic runs through your experiment faster. However, if you aren’t in a hurry for the results then you might get away with running an experiment with a few affiliate links but over a longer period (say three months instead of just one month) to get the critical mass of clicks and sales you need to get accurate results.

Do you get enough traffic from the countries you want to run the experiment for? The amount of relevant click traffic running through your experiment is also a major consideration. If you are only testing a small subset of your traffic then you’ll need more time (or clicks) to get your results.

If the bulk of your traffic is from the country that is most important for your test you have little to worry about (as is the case for our US test of Amazon direct links versus the Choice Pages). However, if you are looking to test for the value of localizing affiliate links but only 10% of your traffic is international, you’ll need your experiment to run longer, or include more links so that you get a critical mass of clicks that are relevant to your experiment.
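A rough back-of-the-envelope calculation can help with this sizing decision. The sketch below assumes steady daily traffic, which real links rarely have, and the function name and input numbers are hypothetical. The target of 1,000 clicks per arm is the rule of thumb suggested later in this post.

```python
import math


def days_to_reach(target_clicks_per_arm, daily_clicks, relevant_percent, arm_percent=50):
    """Estimate how many days until one arm of the test sees enough clicks.

    daily_clicks      -- average clicks per day across the links in the test
    relevant_percent  -- share of clicks (0-100) from the countries being tested
    arm_percent       -- share of relevant clicks (0-100) sent to this arm
    """
    per_arm_per_day = daily_clicks * relevant_percent * arm_percent / 10_000
    if per_arm_per_day <= 0:
        raise ValueError("no relevant traffic reaches this arm")
    return math.ceil(target_clicks_per_arm / per_arm_per_day)


# Hypothetical site with 200 clicks a day and a 50/50 split
mostly_relevant = days_to_reach(1000, daily_clicks=200, relevant_percent=90)
mostly_irrelevant = days_to_reach(1000, daily_clicks=200, relevant_percent=10)
print(mostly_relevant, mostly_irrelevant)
```

If the estimate comes out longer than you are willing to wait, that is a signal to include more links in the experiment or to test links as a group.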

Write it all down!

Keep taking notes! Don’t forget to write down your thoughts on the topics you’ve explored during this phase. These notes will be essential in later phases as you explore the validity of your results and how to move forward.

3/ Set up your links

In my opinion, this is the most fun part of the experiment, however, it can also be the most intimidating if you haven’t followed the first two steps. Eagerly jumping right into these steps can lead to mistakes that often only become apparent when you’re analyzing your results.

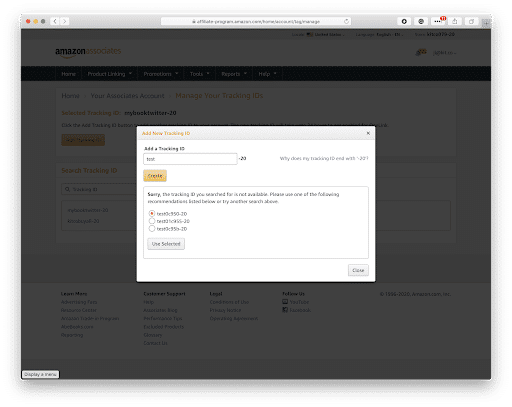

Tracking IDs vs. SubIDs

An essential aspect of running an experiment is differentiating your normal clicks, sales, and commissions from those of your experiment, and then inside your experiment from your test versus your control. This can be done by careful use of SubIDs, or in the case of Amazon, Tracking IDs.

If you aren’t familiar with SubIDs, they are a godsend for taking your affiliate game to the next level, even outside of running experiments. The gist is that they allow you to assign an arbitrary value to your affiliate links then see the clicks, sales, and commissions that are associated with that arbitrary value. This can be super helpful if measuring the performance of different properties, or different placements of affiliate links, etc. Your implementation can be fairly basic and just include a few or you can go crazy. For example, the detailed use of SubIDs is how various rewards programs are managed for the various cashback sites to ensure you are rewarded.

A great resource for better understanding SubIDs and how each affiliate network implements and supports them can be found in Affluent’s blog: The Definitive Affiliate Sub-Campaign (SID) Tracking Guide + Cheat Sheet.

The kicker with Amazon’s affiliate program is there isn’t support for SubIDs except for a limited few publishers that have been blessed with “subtags” as Amazon calls them.

This additional layer of tracking isn’t available to the majority of us and requires an Amazon account manager granting you access. However, the good news is that Amazon allows you to generate up to 100 unique tracking IDs.

So while you can’t arbitrarily assign a tracking ID for each link, you can quickly create the IDs you need for an experiment ahead of time then use those. It’s not as flexible, and certainly not as convenient, but it works.

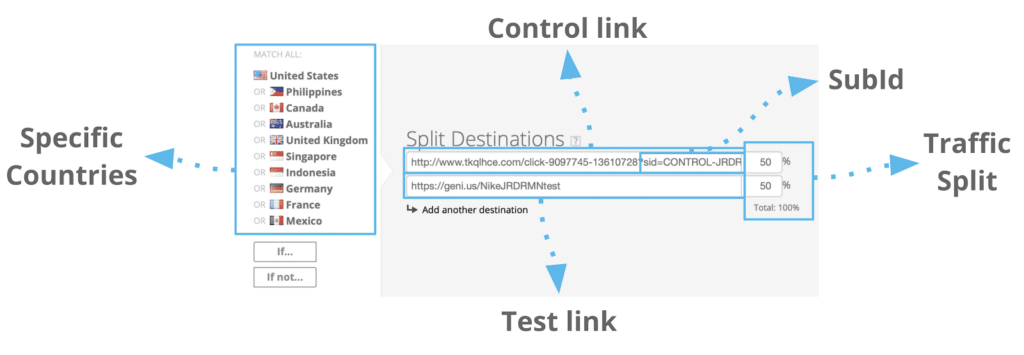

For non-Amazon links, you’ll want to use SubIDs to independently identify your test and control links at a minimum, but you can also take this a step further and include some other information in the SubIDs that may be necessary later.

Nike Localization example:

For the Nike test, we took the more granular approach and set up each link with its own subID tracking. Each link had three bits of information we wanted to track: “Test” vs. “Control”, the link/shoe the test was for, and what country the link was associated with.

TEST-ZKD12-US

TEST-ZKD12-PH

CONTROL-ZKD12-US

There were obviously 10 different test links for the different countries we tested for.

For this particular test, one thing I would have done differently would be to put the link/shoe code before test/control so that I could more easily sort the results in the exported spreadsheet.
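A naming scheme like this is easy to generate and parse with a few lines of code. The sketch below uses the product-first ordering suggested above, so that a plain sort groups each shoe's rows together. The helper names are hypothetical, and it assumes the product code itself contains no hyphens.

```python
from typing import NamedTuple


class SubId(NamedTuple):
    product: str   # link/shoe code, e.g. "ZKD12"
    arm: str       # "TEST" or "CONTROL"
    country: str   # two-letter country code


def build_subid(product, arm, country):
    """Build a SubID string in PRODUCT-ARM-COUNTRY order."""
    arm = arm.upper()
    if arm not in {"TEST", "CONTROL"}:
        raise ValueError(f"unknown arm: {arm}")
    return f"{product.upper()}-{arm}-{country.upper()}"


def parse_subid(subid):
    """Split a SubID back into its three parts (assumes no extra hyphens)."""
    product, arm, country = subid.split("-")
    return SubId(product, arm, country)


subids = [
    build_subid("ZKD12", "test", "us"),
    build_subid("ZKD12", "test", "ph"),
    build_subid("ZKD12", "control", "us"),
]

# Sorting now groups by product first, then arm, then country
for subid in sorted(subids):
    print(subid, parse_subid(subid))
```

Parsing the SubIDs back into separate fields makes it simpler to pivot an exported report by arm or by country later on.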

Multi-Retailer example:

To keep the organization as simplified as possible, the tracking IDs we built inside of Amazon mimicked the link code we used. For example:

https://geni.us/VictFibrAZControl used the tracking ID victfibrazcontrol-20.

https://geni.us/VictFibrAZChoice used the tracking ID victfibrazchoice-20.

Further, since we had multiple retailers in this experiment, we used the affiliate aggregator Sovrn Commerce to affiliate the other retailers. To keep track of each link we used a similar tracking ID but then added on the additional two-letter code for each retailer in that bunch. For example, VictFibrAZWM was used for a Choice Page that included both Amazon and Walmart. To help keep things simple, we then named the link after the tracking ID.

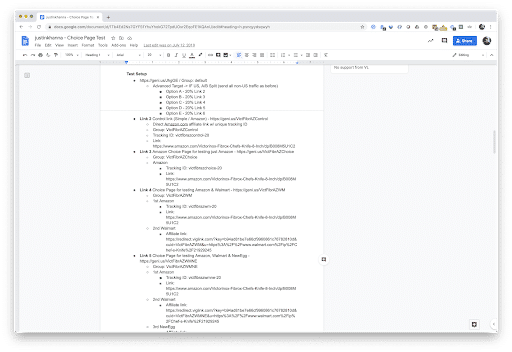

Draft first

Before you get overwhelmed with figuring out what SubIDs / tracking IDs to use, it’s best to sketch out your experiment using the information from the first two steps. This can be done in a couple of different ways.

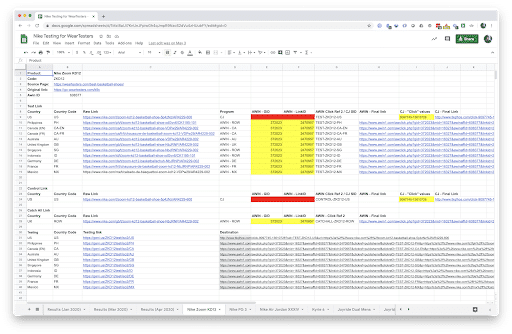

For the Nike localization test, we used a spreadsheet that included columns for the country, link destination, tracking information, subIDs, and the final, fully affiliated destination link.

For our multi-retailer / Choice Page test, we took a different approach and sketched out everything in an outline in a Google Docs document using the first round of indents as a destination with the necessary details for the affiliate link’s expected behavior written out below.

Don’t worry about the format, the goal is to just get your thoughts down on paper so you have a plan when you start to build the links.

Building the links

We’re almost to the fun part. Just one last quick dive into theory before we get our hands dirty building the links.

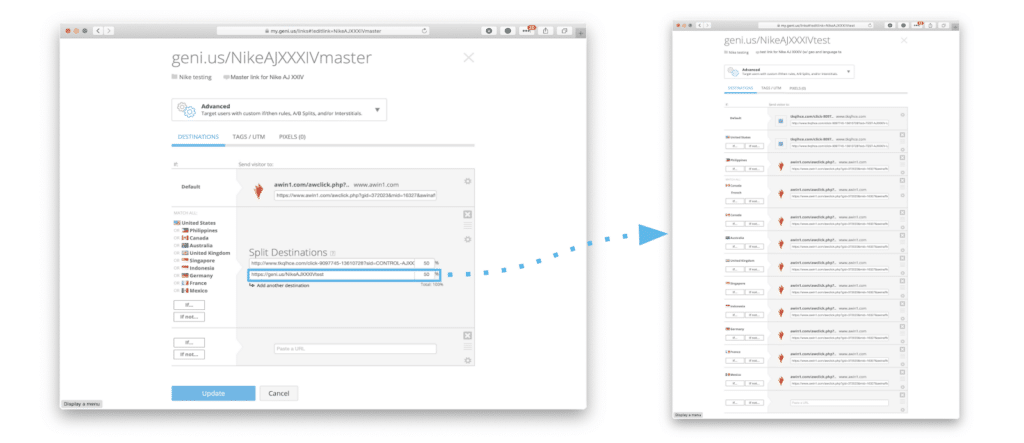

Introducing the “Master” link

Ready for some Inception level fun?

We will need to “nest” one geni.us link inside the other in order to take full advantage of Geniuslink’s link management features.

This step ensures we are filtering out unnecessary clicks and using the correct tracking IDs. For experiments where you are comparing the performance of multiple affiliate links, you’ll want a single link that you’ll publish.

This is the Master link and it’s the one that redirects traffic to the multiple different test and control links.

Using a Master link creates a “clean room” for the experiment by helping ensure that external forces and seasonality don’t skew the results of a test.

Using a Master link, with other geni.us links nested inside means that one geni.us link may instantly redirect to another geni.us link before getting passed to the appropriate destination.

Step 1- Building the Test Link

For most experiments, the “Test” link is often the newer, and likely, more “complicated” link (what you are theorizing will convert better for you). It’s best to build this link first, as you will be nesting it inside the “Master” link.

For our Nike localization experiment, the Test link was a localized Nike link. The way we created a localized link for Nike was by using the “Advanced” link format where we can identify different factors — such as geography, language, device, operating system, browser, and date — that will impact how a link resolves.

Thanks to our helpful spreadsheet, we now have the various destinations we need for each link so this next step is simply setting up the per-country logic we need the link to follow, then copying and pasting each destination into the correct spots.

Step 1.1 – Building the Control Link

Often the Control link is simply a direct affiliate link and therefore may not need any of the additional functionality that the Geniuslink link management platform offers.

However, it’s important to note that if you are using an iTunes / Apple Music link or an Amazon link in your experiment, you’ll want to create a separate link or group for the Control. This ensures that your Test or Master link metrics don’t bleed over into your Control analytics due to the auto-affiliation and link localization that is built into the Geniuslink platform. Make sure your Control link has its own Group and Affiliate Overrides.

Step 2- Building the Master Link

The “Master” link is the one that is going to be published (or is already live) and where the A/B split link functionality is placed.

For a simple experiment, where we don’t need to filter anything out, the link type would simply be an A/B split link. For more complex experiments, where you need to filter out specific traffic, you’ll want to use an Advanced Link and set a specific rule to define exactly which traffic should flow through to the experiment.

In our Nike example, we only wanted to do the experiment for traffic coming from one of 10 countries so we built an Advanced Link and set an “If” rule that included the 10 countries.

Clicking the gear, inside the first set of rules, we get the option to do an A/B Split Destinations link where we can drop in links to our test and control links for the experiment.

We also have a “Catch-all” link here that is the default. This is the link that is used for any traffic that doesn’t match one of the defined rules. So in the Nike example above, a click from Japan, which isn’t one of the 10 countries listed, would get sent to the default destination.
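The logic of the Master link can be summarized in a few lines. This is only a sketch of the decision flow, not how the Geniuslink platform is built: the URLs are hypothetical placeholders, and the country set lists only the two countries named in this post rather than all 10 used in the real test.

```python
import random

# Hypothetical placeholder destinations for the nested links
TEST_LINK = "https://example.com/nike-test"
CONTROL_LINK = "https://example.com/nike-control"
CATCH_ALL = "https://example.com/nike-default"

# The real test covered 10 countries; only these two are named in the post
EXPERIMENT_COUNTRIES = {"US", "PH"}


def resolve_master(country, rng=random, test_share=0.5):
    """Decide where a click on the Master link goes.

    1. The "If" rule: only clicks from experiment countries enter the test.
    2. The A/B split: those clicks are divided between Test and Control.
    3. The catch-all: everything else goes to the default destination.
    """
    if country.upper() in EXPERIMENT_COUNTRIES:
        return TEST_LINK if rng.random() < test_share else CONTROL_LINK
    return CATCH_ALL


print(resolve_master("JP"))  # Japan is outside the experiment, so it gets the default
print(resolve_master("US"))  # randomly the Test or the Control link
```

Keeping the filter outside the split is what stops out-of-scope traffic from diluting either arm's numbers.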

The following diagram should help bring it all together:

And to see it at a high level:

50/50 Split?

While the default for an experiment is to evenly distribute your traffic between the test and the control, this isn’t required. In fact, you can tweak the ratio however you’d like.

For example, if you are feeling cautious about disrupting your existing link set up but still curious you could just set the test at 20% and leave your existing model (the control) at 80% of the traffic. When you normalize the earnings per click this difference in volume can be accounted for.

Write it Down!

The link setup can get fairly technical, fairly quickly, so don’t forget to take down notes to record the process. It’s way easier to take the notes during — or immediately after — link creation than trying to remember why you made a specific decision many days, weeks, or even months later.

4/ Publish (and test!)

Once you’ve got your affiliate links built exactly how you want it’s time to get them published, and most importantly, tested to ensure they are functioning exactly as you expect.

New vs. Existing Links

Depending on your experiment and your game plan you have another crucial decision to make — are you going to publish new links or update existing links?

Creating new affiliate links works great for social media posts where there is a short half-life to the post (Twitter and Facebook). New links also work well for email. When testing with new links, you don’t have to pay as much attention to the time period of the traffic, since there will be no click history on the link that could possibly cloud your results.

However, updating an old link, either to become your Master link or redirect to it, works really well for more evergreen content. This includes affiliate links on your blog or YouTube descriptions.

The benefit of using an existing link is that you should already have a good gauge of the traffic levels and breakdown so you can make a more educated guess on the strength of your test. The other benefit is that your test can start immediately since you’re making updates to an already established link that gets traffic.

Testing Your Links

Once your link is published it’s important that you test it from end-to-end to ensure everything is working exactly as it should.

The devil is in the details here and it’s important to be diligent in your testing — I can’t emphasize this enough! It’s possible to ruin an entire experiment by not catching a simple mistake early on.

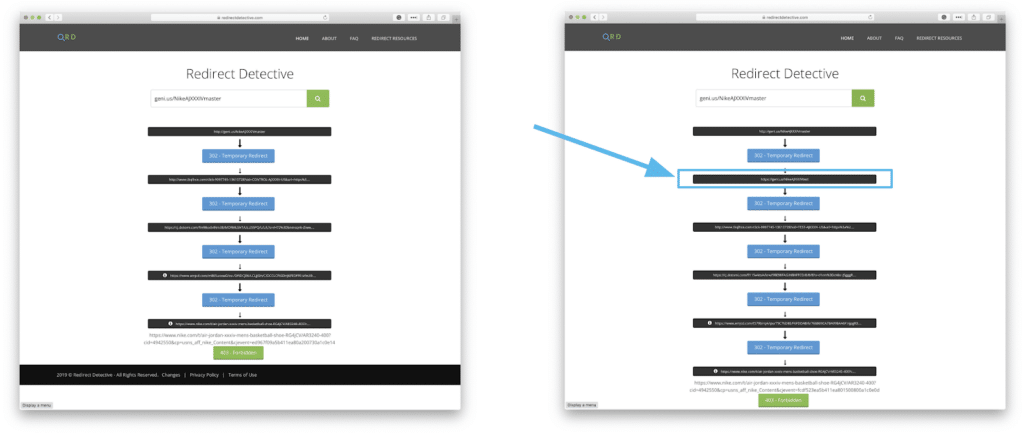

Because you have multiple links working together, and because the end result for your own clicks is likely the same either way, it’s advisable to use a third-party tool to monitor the links so you can observe the individual steps.

For example, on the Nike test, since I’m in the US I would be directed to the US Nike store regardless of whether I took the Test path or the Control path. However, by using a tool to track the resolution of the affiliate link, I can see the individual steps taken in the resolution of the path.

The following screenshot shows how in the Test path there was an extra step.

My two personal favorite tools for testing are the site Redirect Detective and the Chrome extension Link Redirect Trace.

Besides checking the link resolution path to ensure that some clicks go the test route and others go the control route, you’ll also want to take a peek at the URLs in the process to ensure they include the correct tracking ID or subID.
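If you copy the URLs from your redirect-tracing tool, a short script can confirm that the expected ID is present. The sketch below checks a query-string parameter; Amazon links carry the tracking ID in the `tag` parameter, while SubID parameter names vary by affiliate network, so the parameter name is passed in rather than assumed. The product URL shown is a made-up placeholder.

```python
from urllib.parse import urlsplit, parse_qs


def has_tracking_value(url, param, expected):
    """Return True if `url` has query parameter `param` set to `expected`."""
    query = parse_qs(urlsplit(url).query)
    return expected in query.get(param, [])


def urls_with_value(chain, param, expected):
    """Filter a redirect chain down to the URLs carrying the expected value."""
    return [url for url in chain if has_tracking_value(url, param, expected)]


# Hypothetical redirect chain copied from a tracing tool
chain = [
    "https://geni.us/VictFibrAZControl",
    "https://www.amazon.com/dp/B000000000?tag=victfibrazcontrol-20",
]

matches = urls_with_value(chain, "tag", "victfibrazcontrol-20")
print(matches if matches else "Tracking ID not found in any step!")
```

Running the same check with the Test link's tracking ID against the Control chain should come back empty, which is a handy way to catch a copy-and-paste mix-up.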

Please note that our built-in “/iso2/XX” testing hack may not be enough to test the behavior when you are nesting geni.us links as it only works for the first geni.us link. You’ll need to use a proxy / VPN for testing in other countries where your location matters to both the Master and the Test links.

Checking for Clicks and Sales

Once you have published your affiliate link(s) and confirmed their redirect behavior, the next step is to ensure everything is tracking.

It’s best to check the tracking from within the Geniuslink dashboard first, where clicks are reported in nearly real-time.

However, once you’ve done that you’ll want to log into the affiliate network dashboards associated with the affiliate links you are testing.

You might want to wait a day after you’ve published the links to ensure the data has had time to flow through. At this point, you are only looking for clicks since sales typically take even longer to start reporting.

Making sure that clicks are registering is your first goal, but what is most important is that they are registering for the specific tracking ID or SubIDs that you set up in the test!

If you are seeing clicks for all of the tracking IDs or SubIDs that you set up, you can safely assume that when a sale happens it will be correctly reported.
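If you are juggling more than a couple of tracking IDs, it can help to compare what you set up against what the network is reporting. Here is a rough sketch, assuming you have typed the click counts from the network dashboard into a dictionary by hand; the ID names are hypothetical.

```python
def ids_without_clicks(expected_ids, clicks_by_id):
    """Return the tracking IDs that show zero (or no) clicks in the network report."""
    return [tid for tid in expected_ids if clicks_by_id.get(tid, 0) == 0]


# Hypothetical tracking IDs and click counts copied from a network dashboard.
expected = ["nike-control", "nike-test"]
report = {"nike-control": 42}

missing = ids_without_clicks(expected, report)
print(missing)  # ['nike-test']
```

Any ID that shows up in the output is one to investigate before trusting the sales numbers later on.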

It’s also important to note that you shouldn’t get hung up on any reporting differences between the affiliate network and your link management tool. Unfortunately, it seems every tool measures a “click” slightly differently. As long as you stay consistent about where you grab your click numbers (another benefit of using a trustworthy link management tool), it doesn’t matter how many clicks the affiliate network registers.

The primary concern is that they are registering clicks (and thus, in theory, will register sales and commissions as well).

5/ Wait…

Depending on how patient you are, this is the easiest (or hardest!) part of the experiment. Now that you have everything set up, you need to give your affiliate links time to do their thing so you’ll have meaningful data in the end.

The length of waiting totally depends on the experiment. If you are testing two very distinctive behaviors between the test and control cases then you’ll need less traffic and/or less time to get to a point where you have meaningful data.

On the other hand, if the difference between the two affiliate links is subtle then it will take more time, or traffic, to get to a point where you have enough data to make a call.

The other thing that directly impacts the time you have to wait is the click traffic. If you have very little click traffic then you’ll need to wait longer. If you have a ton of traffic going through your Master link then your wait time can be significantly shorter. This goes back to our discussion early on about whether you want to test links individually (takes more traffic or time) or as a group (combining the traffic reduces the wait).

As a general rule of thumb, we recommend at least 1,000 clicks for each of the test and control links and/or letting the test run for about a month.
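To turn that rule of thumb into a rough wait estimate, you can divide the click target by the daily traffic the smaller of your two variants receives. This is a back-of-the-envelope sketch, assuming steady daily traffic; the traffic numbers and the split are hypothetical.

```python
import math


def days_to_reach_target(daily_clicks, test_share, target_per_variant=1000):
    """Days until BOTH variants have at least target_per_variant clicks."""
    if not 0 < test_share < 1:
        raise ValueError("test_share must be between 0 and 1 (exclusive)")
    # The variant receiving the smaller share of traffic is the bottleneck.
    smaller_share = min(test_share, 1 - test_share)
    return math.ceil(target_per_variant / (daily_clicks * smaller_share))


# Hypothetical: 100 clicks a day through the Master link.
print(days_to_reach_target(100, 0.5))   # 20 days with an even split
print(days_to_reach_target(100, 0.25))  # 40 days if only 25% goes to the test
```

Real traffic is rarely this even, so treat the result as a floor rather than a schedule.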

Ultimately, the goal is statistically significant results, which we’ll dive into in the last step.

6/ Analyze

Once you think you’ve had enough traffic run through your affiliate links — or at least want to check in to see how much longer you should wait — it’s time to grab some data and look at the results.

Define the Dates

The first important thing to do in pulling your data is to define the date range for the data you will be looking for.

Your start date should be at least one day after you started the experiment. This will help filter out all of the test clicks you made after you initially published the master link for the experiment.

Your end date should take into account the normal lag for all the sales reporting to filter in. Amazon, for example, has a short 24-hour cookie window and reports sales and commissions the next day. However, the lag with Amazon can be quite long if you want to ensure you capture ALL of the sales that came from your link, due to the 89-day shopping cart window.

On the flip side, if you are using a service like Sovrn Commerce or Skimlinks then your sales/commission reporting might be delayed multiple days or possibly even a week or more.

It’s important to take these factors into consideration when you set the end date for the data pull. You certainly don’t want to use the date that you’re pulling the report. Instead, use a date four or five days prior.
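The two date rules above (start a day after launch, end a few days before the pull) are easy to compute. Here is a small sketch; the five-day lag is simply the upper end of the range suggested above, and the example dates are made up.

```python
from datetime import date, timedelta


def reporting_window(experiment_start, report_pulled_on, lag_days=5):
    """Return (start, end) dates for the data pull.

    start: one day after launch, to skip your own test clicks.
    end:   lag_days before the pull, to let sales reporting catch up.
    """
    start = experiment_start + timedelta(days=1)
    end = report_pulled_on - timedelta(days=lag_days)
    if end < start:
        raise ValueError("Not enough time has passed to pull a clean date range")
    return start, end


# Hypothetical dates: launched Feb 1, pulling the report on Mar 20.
start, end = reporting_window(date(2024, 2, 1), date(2024, 3, 20))
print(start, end)  # 2024-02-02 2024-03-15
```

If one of your programs reports more slowly, bump `lag_days` up to match the slowest one.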

Once you’ve decided on the date range be sure to write it down. Having your date range handy is super important for the next two steps.

Grab Click Data

As we mentioned earlier, click counts between various tools can be all over the place for the exact same campaign.

For this reason, be sure to use the click data for Test and Control that is provided by your link management platform.

It doesn’t matter if these numbers don’t perfectly match the reports provided by the affiliate network. Just compare the clicks reported by your link management platform.

Don’t forget to double-check the date ranges!

Grab Commission Data

Once you have the click data it’s time to grab your commission data. This comes directly from the various affiliate networks or programs that you were using in the experiment. Again, be sure to set the correct date range for your report.

Two easy mistakes to make:

It’s important to note that you want your total commissions, not the overall sales data (as you are likely paid out at different rates by different programs).

If your experiment includes an international aspect you’ll want to double-check the currencies your commissions are being reported in and then convert them to a common currency so that your EPC calculations are all using the same currency.
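For the currency step, the idea is simply to multiply each commission by its exchange rate before adding everything up. A minimal sketch follows; the exchange rates and commission amounts are invented for illustration, so use the actual rates for your reporting period.

```python
def total_commission_in(base_currency, commissions, rates_to_base):
    """Sum (amount, currency) pairs after converting each to base_currency."""
    total = 0.0
    for amount, currency in commissions:
        if currency == base_currency:
            total += amount
        else:
            # rates_to_base[currency] = how much base currency one unit buys
            total += amount * rates_to_base[currency]
    return round(total, 2)


# Hypothetical rates and commissions, NOT real market data.
rates = {"GBP": 1.25, "EUR": 1.10}
commissions = [(40.00, "USD"), (10.00, "GBP"), (20.00, "EUR")]

total = total_commission_in("USD", commissions, rates)
print(total)  # 74.5
```

A missing rate raises a `KeyError`, which is deliberate: it is better to stop than to quietly add pounds to dollars.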

Look at Earnings Per Click

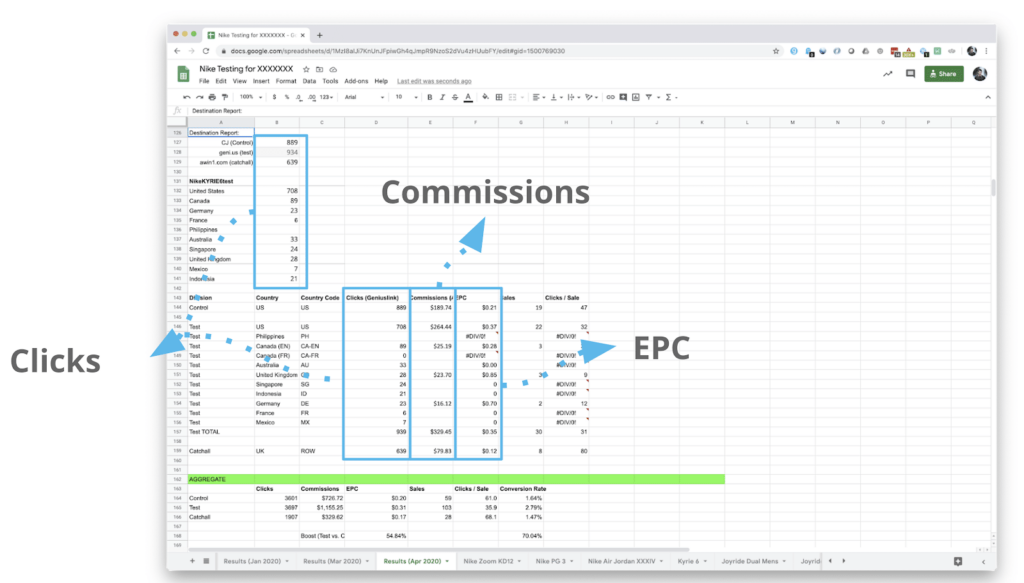

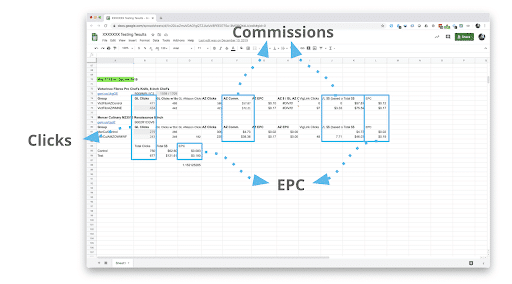

Once you have your click data and commission data pulled up it’s time to organize them into a spreadsheet so you can calculate your EPC.

How you organize your spreadsheet is a matter of personal preference. I tend to love spreadsheets so mine are a bit more complex (see examples below) but you only need a few columns:

Clicks – for both the test and the control links/groups.

Total Commissions – for both the test and the control links/groups. In our experiments, we were comparing a link to a single affiliate program against one that had multiple options, so we needed to sum up the commissions from multiple programs to get a total.

To get your EPC number you’ll want to divide your total commissions by your clicks.

If you had 1,000 clicks and earned $100, then your EPC would be $100 divided by 1,000 and would be equal to $0.10.
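If you would rather not open a spreadsheet, the same calculation is a one-liner in Python. This sketch just reproduces the example above.

```python
def earnings_per_click(total_commissions, clicks):
    """EPC = total commissions divided by clicks."""
    if clicks <= 0:
        raise ValueError("clicks must be greater than zero")
    return total_commissions / clicks


epc = earnings_per_click(100.0, 1000)
print(f"${epc:.2f}")  # $0.10
```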

For our Nike test, our spreadsheet to track the clicks, total commissions and to calculate the EPC looked like this:

For the Choice Page / Multi Retailer experiment the spreadsheet looked like this:

7/ Review Results

And?!? The moment of truth sits in those EPC numbers you just calculated. Which was higher, the EPC for the Test or Control?

From our experiments, we found that the localized Nike link had a 54.84% higher EPC ($0.20 for the control and $0.31 for the test) showing that our thesis — “Localizing a Nike affiliate link will lead to more commissions and a higher conversion rate.” — was correct!

Our experiment comparing a Choice Page to a direct Amazon link showed that the multi-retailer Choice Page increased EPC by 115% ($0.180 for the test and $0.083 for the control). This also proved our thesis (“A Choice Page, optimized for the US, will perform better than an Amazon.com link.”) correct.
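The percentage lift is the difference between the two EPCs divided by the control EPC. Here is a quick sketch using the rounded EPCs quoted above. Because those figures are rounded, the output lands close to, but not exactly on, the percentages in the text, which I am assuming were calculated from the unrounded numbers.

```python
def percent_lift(control_epc, test_epc):
    """Percentage change in EPC from control to test."""
    if control_epc <= 0:
        raise ValueError("control_epc must be greater than zero")
    return (test_epc - control_epc) / control_epc * 100


nike = percent_lift(0.20, 0.31)           # rounded EPCs from the Nike test
choice_page = percent_lift(0.083, 0.180)  # rounded EPCs from the Choice Page test
print(f"Nike: {nike:.1f}%  Choice Page: {choice_page:.1f}%")
```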

Statistical Significance

While you can usually get a gut feel from the EPCs, a final step is to check your numbers to see if you’ve achieved “statistical significance.” While it’s a bit of a mouthful, a statistically significant result is one that is unlikely to have come from random chance alone. Put another way, statistical significance measures how confident you can be that the difference in results is attributable to “a” difference between your test variant and the control variant.

However, it’s important to note that this may not measure “THE” difference in results depending on the way the test is set up. As a result, be sure to take the results with a grain of salt.
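For the curious, one common way to check significance for A/B data is a two-proportion z-test, sketched below. Treat it as an illustration of the idea, not a description of what any particular online calculator does under the hood. It assumes your inputs are counts (clicks and conversions), and the numbers in the example are hypothetical.

```python
import math


def two_proportion_p_value(clicks_a, conv_a, clicks_b, conv_b):
    """Two-sided p-value for the difference between two conversion rates."""
    rate_a = conv_a / clicks_a
    rate_b = conv_b / clicks_b
    # Pooled rate under the assumption that both variants perform the same.
    pooled = (conv_a + conv_b) / (clicks_a + clicks_b)
    std_err = math.sqrt(pooled * (1 - pooled) * (1 / clicks_a + 1 / clicks_b))
    if std_err == 0:
        return 1.0  # no variation at all, so nothing to distinguish
    z = (rate_b - rate_a) / std_err
    # Standard normal CDF expressed with the error function.
    cdf = 0.5 * (1 + math.erf(abs(z) / math.sqrt(2)))
    return 2 * (1 - cdf)


# Hypothetical: 1,000 clicks each, 20 conversions on control vs. 40 on test.
p = two_proportion_p_value(1000, 20, 1000, 40)
print(f"p-value: {p:.4f}, significant at 95%: {p < 0.05}")
```

A p-value below 0.05 is the conventional "95% confidence" threshold. The smaller the p-value, the less likely the gap is just noise.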

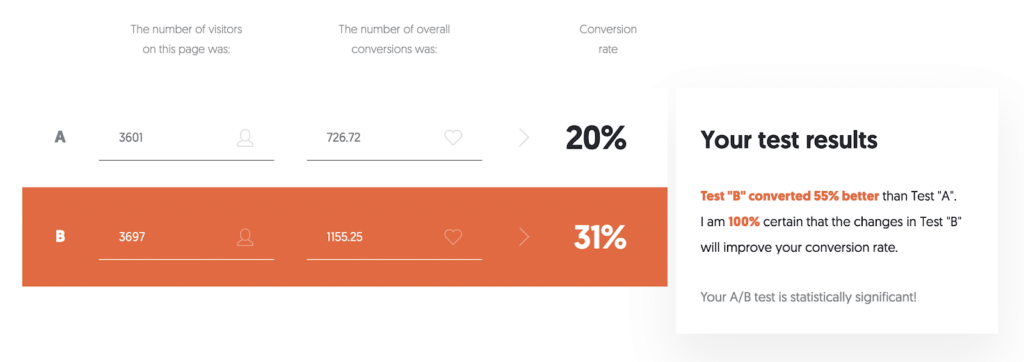

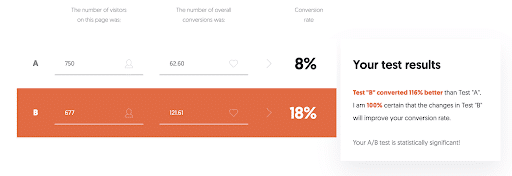

There are numerous online A/B Testing Calculators but the one we prefer is from the well-known Neil Patel, titled simply A/B Testing Significance Calculator. For these tools, we used clicks instead of the number of visitors, and instead of the number of overall conversions, we used total commissions earned.

Using this tool for the Nike experiment, we got the following results:

For the multi-retailer / Choice Page experiment the results were also statistically significant:

Control Wins?

If your results come back as nearly the same you should absolutely not consider the experiment a failure, but rather a learning opportunity. Whenever we can learn something, it’s a success. Knowing what doesn’t work is just as important as knowing what does.

“I now know 999 different ways that won’t work.” – Thomas Edison (source)

This is a good time to go back to your notes and read through them. Were you testing what you wanted to be testing? Was it a good thesis that you were working from? Is there a better way to set up your experiment?

It may be worth running a variation of the experiment based on your new insights. Or it may be worth moving on to your next experiment knowing that this hypothesis didn’t work out.

More Time?

While your experiment may have come back with a clear conclusion, either for, or against, the test version being better, it may also have come back inconclusive or not statistically significant.

No problem. In most cases you can simply keep running the experiment. More time usually means more clicks, and with more data any real difference, however minor, should become large enough to measure.

If you are seeing some promise in the results but still want to continue the experiment you might want to increase the % of traffic flowing into your test link. This allows you to continue to learn from the experiment but also, hopefully, see a higher net reward from your traffic.
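To see what shifting the split could do to your earnings, you can blend the two EPCs by their share of traffic. This is a rough sketch that assumes the interim EPCs hold up, which is exactly what the experiment has not confirmed yet. The example borrows the rounded EPCs from our Nike test.

```python
def blended_epc(test_share, test_epc, control_epc):
    """Expected earnings per click across all traffic for a given split."""
    if not 0 <= test_share <= 1:
        raise ValueError("test_share must be between 0 and 1")
    return test_share * test_epc + (1 - test_share) * control_epc


# Rounded EPCs from the Nike experiment: $0.31 test, $0.20 control.
for share in (0.5, 0.8):
    epc = blended_epc(share, 0.31, 0.20)
    print(f"{share:.0%} to test -> ${epc:.3f} per click")
```

Keep in mind that the side receiving less traffic will take longer to accumulate clicks, so a lopsided split stretches out the wait for a conclusive result.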

Next Steps

Congratulations! You made it through our guide and are now ready to run your own experiment. Big or small, we strongly encourage you to dive in to start learning ways to better maximize your affiliate marketing efforts.

Work Together?

If you are interested in running some experiments and would be okay with sharing your results we’d love to team up with you.

We can provide you with Geniuslink account credits in exchange for access to your data and approval to publish the results.

We are excited to help move the industry forward and would be delighted to work with you to do exactly that!

If this is of interest, please reach out and let us know!
